05.12.2025

Learn JavaScript for Free: 12 Online Resources for Every Level of Expertise

By The Fullstack Academy Team

JavaScript is one of the most popular programming languages in the world. If you’re interested in building web applications or becoming a software developer, learning JavaScript will help, even if you’re already an advanced programmer. Plus, its core concepts make it easier to pick up additional programming languages.

Once considered a strictly client-side language, JavaScript can now run on both the front end and the back end thanks to Node.js. That yields a lot of benefits, both for learning web development and in the job market.
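To make the front-end and back-end point concrete, here is a minimal sketch of a helper that relies only on core language features, so the same file could be loaded by a browser or by Node.js. The names `slugify` and `describeRuntime` are hypothetical and chosen only for illustration, and the runtime check is a rough heuristic rather than an official API.

```javascript
// Uses only core language features, so it behaves the same in a browser and in Node.js.
function slugify(title) {
  return title
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9]+/g, "-") // collapse each run of non-alphanumerics into one hyphen
    .replace(/^-+|-+$/g, "");    // drop leading and trailing hyphens
}

// Rough heuristic (an assumption, not an official API):
// browsers expose `window`, while Node.js exposes `process.versions.node`.
function describeRuntime() {
  if (typeof window !== "undefined") {
    return "browser";
  }
  if (typeof process !== "undefined" && process.versions && process.versions.node) {
    return "Node.js";
  }
  return "unknown";
}

console.log(slugify("Learn JavaScript for Free"), "running in", describeRuntime());
```

Pasting this into a browser console or saving it to a file and running it with `node` should produce the same slug; only the reported runtime differs.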

But which JavaScript resource is for you? We’ve compiled a list of the best resources to learn JavaScript for free, whether you’re just starting out or you already know how to use booleans, strings, and data structures.

What is JavaScript?

JavaScript (JS) is a programming language that breathes life into websites, making them interactive and engaging. It lets you create interactive features like animations, clickable elements, dynamic content, and smooth transitions. Learning JavaScript is your first step to becoming a web development wizard, and you can get started for free!

Importance of JavaScript

JavaScript is a fundamental skill for anyone who wants to build modern web applications. Here's why it's so important:

Essential in Web Development: JavaScript is the foundation of modern web applications. JS is everywhere you look, from social media platforms to online games, making it a crucial programming language for aspiring web developers.

Makes Websites Interactive & Engaging: JavaScript is the key to creating dynamic and user-friendly web experiences. It lets you add features like animations, clickable elements, and real-time updates to keep users engaged.

Boosts Your Coding Career Prospects: JavaScript is one of the most in-demand programming languages. Mastering it can open doors to exciting coding careers and increase your earning potential.

Ensures a Strong Foundation for Learning Other Languages: Learning JS sets you on the right track for exploring other programming languages. Its core concepts are fairly universal as well as comprehensive, so mastering them gives you a head start in learning subsequent languages.

By investing time in learning JavaScript, you're not just acquiring a skill; you're unlocking a wealth of opportunity in the dynamic world of web development.

Ready to break into tech?

Learn the #1 programming language in 13 to 22 weeks with our live online coding bootcamp.

How to Learn JavaScript for Free

There are many ways to learn JavaScript for free, including online courses, books, coding challenges, and groups and meetups. Here's your roadmap:

Explore Free Online Courses & Tutorials: Platforms like Codecademy, Udacity, and Mozilla Developer Network (MDN) offer high-quality, interactive lessons tailored to various learning styles. For beginners, Fullstack Academy’s Intro to Coding course could be a great starting point alongside the other free online resources for learning JavaScript.

Embrace Interactive Coding Playgrounds: Experiment with JS code directly in your browser on CodePen or JSFiddle. No setup required - perfect for practicing and getting hands-on experience.

Join the Free Learning Communities: Immerse yourself in vibrant online JavaScript communities like Reddit's r/learnjavascript or Stack Overflow. Ask questions, share progress, and learn from fellow coding enthusiasts.

Leverage Free Books & Documentation: Dive deep with free JavaScript books like Eloquent JavaScript or JavaScript for Cats to solidify your understanding of core concepts.

Contribute to Open-Source Projects: Gain practical experience by contributing to open-source projects on GitHub. You'll collaborate with experienced developers and learn real-world coding practices.

Coding Challenges & Hackathons: Put your JavaScript skills to the test with free online coding challenges and hackathons. This is a fun way to practice, learn from others, and build a portfolio.

These are some of the best ways to learn JavaScript as a beginner. Customize your learning path by pursuing the resources that best fit your schedule and skill level.

Tips to Choose the Best JavaScript Courses

With a vast array of free JavaScript courses available, picking the right one can be overwhelming. Here are some key factors to consider when choosing the best JavaScript course for you:

Learning Style: Do you learn best through interactive exercises, video tutorials, or hands-on projects? Many courses cater to different styles, so choose one that aligns with how you grasp information most effectively.

Skill Level: Are you a complete beginner or do you have some programming experience? Look for courses designed for your current skill set. Beginner courses focus on fundamentals, while intermediate or advanced courses delve deeper into specific topics.

Course Structure & Pace: Consider the course format (videos, text, quizzes) and learning pace. Some courses are self-paced, allowing you to learn on your schedule, while others offer a more structured schedule with deadlines. Choose one that fits your learning preferences and available time.

Community & Support: Does the course offer a supportive community or forum where you can ask questions and get help? This can be invaluable when you get stuck or need clarification on a concept. Look for courses with active communities or instructors who provide prompt assistance.

By keeping these factors in mind, you'll be well-equipped to choose the best free JavaScript course to launch your coding journey.

Beginner JavaScript Courses

1. Fullstack Academy’s Intro to Coding

Fullstack Academy’s Intro to Coding course helps you learn the basics of HTML, CSS, and JavaScript. Designed for total beginners, the 15 hours of videos and challenges focus on the world’s most popular software languages and prepare you for more intensive learning through coding bootcamps such as the Fullstack Academy Software Engineering Immersive.

2. JavaScript for Cats

Designed for complete JavaScript beginners, this comprehensive guide will help you get familiar with basic functions, libraries, data structures, and other JavaScript fundamentals. Plus, it’s filled with helpful examples and visuals that show how each tool and function works, so you can get comfortable using them.

3. Codecademy’s Intro to JavaScript Track

If 0 is a pure beginner and 100 is a professional fullstack developer, 15 to 20 hours of Codecademy’s JavaScript track will take you all the way to 6.8. In all seriousness, Codecademy’s step-by-step tutorial system is great for an introduction to programming in JavaScript. You will learn functions, loops, data structures, and data types. Codecademy also offers online courses for many other languages, such as CSS, HTML, SQL, and Python.

4. Treehouse’s JavaScript Basics

Treehouse offers a multi-platform learning experience that includes videos, coding tutorials, and quizzes. If you’re looking to gain a solid foundation in a short amount of time, the 14-day free trial might suit your needs. In this four-hour course, you will learn where JavaScript is used, basic concepts such as variables, data types, and conditional statements, and how to troubleshoot programming problems.

5. MDN JavaScript

Published by Mozilla, this site incorporates tutorials and lessons in addition to a glossary of JavaScript functions. This could be a good tab to have open next time you’re attempting those codewars.com challenges. MDN JavaScript is available in translation in many other languages, and it's a great refresher on the JavaScript programming language.

6. Learn-JS

Learn-JS.org is intended for everyone who wishes to learn the JavaScript programming language. This website is a free interactive JavaScript tutorial where you can run JavaScript code directly in your web browser, so you can try the language without installing anything. Here you can learn the basics, take advanced tutorials, or help others learn by contributing to tutorials.
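As a rough preview of the fundamentals these beginner courses cover (variables, data types, conditional statements, functions, loops, and data structures), here is a small sketch. Every name and value in it is made up for illustration and does not come from any of the courses above.

```javascript
// Variables holding three basic data types.
const courseName = "Sample JavaScript Course"; // string (hypothetical title)
const hoursPerWeek = 4;                        // number
const isFree = true;                           // boolean

// A function built around a conditional statement.
function describePace(hours) {
  if (hours >= 10) {
    return "intensive";
  } else if (hours >= 3) {
    return "steady";
  }
  return "casual";
}

// A data structure: an array of objects (placeholder entries).
const resources = [
  { name: "Resource A", level: "beginner" },
  { name: "Resource B", level: "beginner" },
  { name: "Resource C", level: "intermediate" },
];

// A loop that tallies how many resources exist per level.
function countByLevel(list) {
  const counts = {};
  for (const item of list) {
    counts[item.level] = (counts[item.level] || 0) + 1;
  }
  return counts;
}

console.log(typeof courseName, typeof hoursPerWeek, typeof isFree);
console.log(describePace(hoursPerWeek));
console.log(countByLevel(resources));
```

You can paste this into a browser console or an online playground and change the values to see how the output responds.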

Intermediate JavaScript Courses

1. CoderByte

CoderByte challenges are an excellent resource, especially if you want to apply to a more selective coding bootcamp as part of a career transition. These coding problems are a pretty accurate representation of the challenges on the Fullstack application’s technical coding assessment. Keep in mind that the beginner-level challenges are still pretty hard. If you find these too difficult, review the beginner resources listed above.

2. Eloquent JavaScript by Marijn Haverbeke

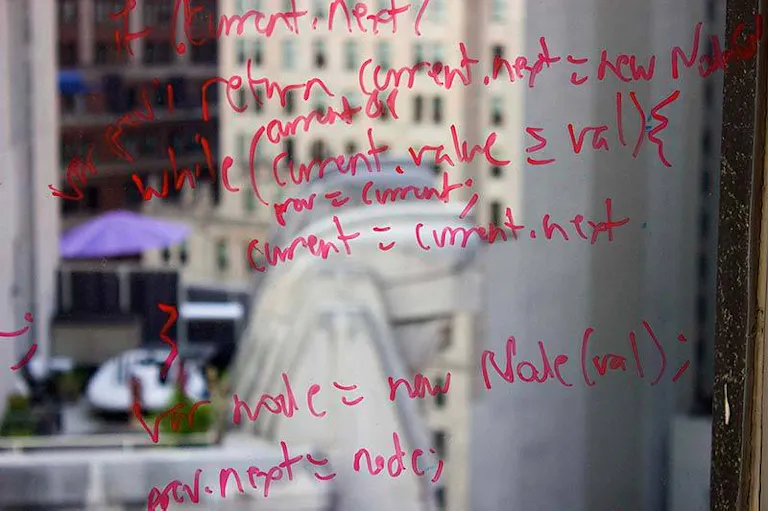

Intermediate-level programmers will benefit from the first four chapters of this book, which give an overview of JavaScript syntax, functions, loops, and arrays, as well as the basic values that data structures are built from (numbers, booleans, and strings).

The rest of the chapters are for advanced programmers and cover topics such as HTTP and forms, the difference between POST and GET requests, and Node.js, a back-end JavaScript runtime that executes code server-side.
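To illustrate the GET versus POST distinction mentioned above, here is a minimal sketch that only builds request descriptions and never sends anything. By convention, a GET request carries its parameters in the URL's query string, while a POST request carries its data in the request body. The endpoint and the helper names `buildGet` and `buildPost` are hypothetical and are not taken from the book.

```javascript
// Hypothetical endpoint used only for illustration; no request is sent.
const ENDPOINT = "https://example.com/search";

// GET: parameters are encoded into the URL's query string.
function buildGet(params) {
  const url = new URL(ENDPOINT);
  url.search = new URLSearchParams(params).toString();
  return { url: url.toString(), options: { method: "GET" } };
}

// POST: data travels in the request body (here serialized as JSON).
function buildPost(data) {
  return {
    url: ENDPOINT,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(data),
    },
  };
}

console.log(buildGet({ q: "javascript" }));
console.log(buildPost({ q: "javascript" }));
```

The returned `url` and `options` values have the shape that `fetch(url, options)` accepts, so they could be passed to it against a real endpoint.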

Intermediate/Advanced JavaScript Tutorials & Courses

1. Scotch.io

Scotch.io is a blog that provides educational tutorials for programmers of all levels. This is a great resource for intermediate and advanced students who are looking to increase their knowledge of the MEAN stack and other emerging JavaScript technologies. It touches on AngularJS (a web application framework for front-end development maintained by Google) and the jQuery library.

2. Egghead.io

To paraphrase this website’s slogan: Life’s too short for any of those other resources on the internet, so just watch these videos! Egghead offers short videos for proficient JavaScript developers to level up their skills. You can access a decent number of lessons for free, or sign up for a paid subscription and get all the knowledge you can handle.

3. Douglas Crockford Videos

Learn JavaScript from one of the language’s foremost pioneers. While a Hawaiian-shirted employee at Yahoo, Douglas Crockford created this lecture series on the creation, rise to popularity, and implementation of JavaScript. These presentations are not only informative but entertaining and anecdotal too.

Next Steps on Your Journey to JavaScript Mastery

If you’re a beginner who wants to learn JavaScript, working your way through this list is a great start. We recommend bookmarking this page for future reference.

If you’re looking to take the next step toward a career as a developer, check out the Fullstack Academy Coding Bootcamp, where we teach an award-winning JavaScript curriculum and see excellent hiring outcomes for graduates.

Want more resources? Explore learning options with Fullstack Academy, or learn about the top programming languages to learn in 2024 (spoiler: JavaScript is #1).